About 10 years ago, as Technical Editor for Reliable Plant and Machinery Lubrication magazines, I conducted a reader survey around the question “What goes wrong in your plant?” That question, and the insights gained from the responses, remain relevant to members of today’s reliability, availability, and maintenance (RAM) community.

In setting up the survey, I used the failure-cause categories outlined in DOE STD 1004, the standard for conducting root-cause analysis in the nuclear power industry. (Note: DOE STD 1004 applies to any industry and is available as a free, downloadable PDF through standards.doe.gov.)

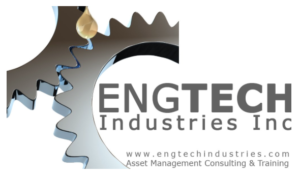

I wasn’t surprised by the results. Respondents attributed between 75% and 80% of all failures to human factors (Fig. 1). Topping the list were lacking or ineffective procedures. Personnel/human error came in a close second. Lack of training and lack of supervision combined for 23%. Design errors rounded out the human-factors component with just under 9%. Equipment and material problems (non-human causes) came in at just under 20%.

About 70% of the reported human-factor failures were organizational artifacts: cases where the organization failed to provide properly designed equipment, the procedures and training needed to operate and maintain that equipment properly, or the supervision needed to ensure that work is done correctly. Let’s delve into these factors in more detail.

Fig. 1. What goes wrong in the plant? Results based on DOE STD 1004 criteria.

GET YOUR CHEESE IN LINE

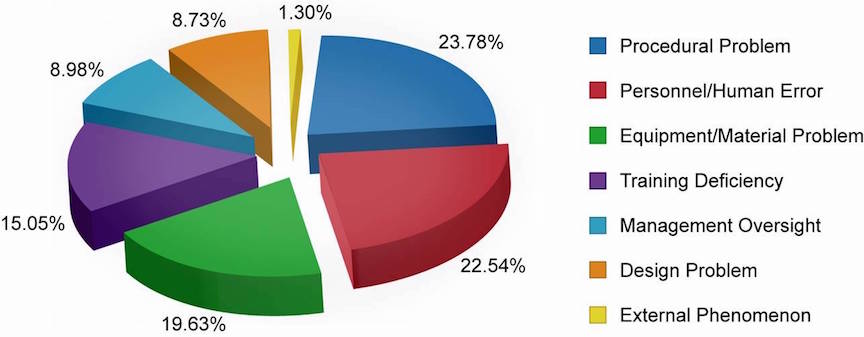

In his seminal book Human Error (1990), James Reason elegantly likened human error to the holes found in Swiss cheese. Most organizations have a series of barriers intended to prevent people from making mistakes. However, these barriers, which Reason represents as slices of Swiss cheese, aren’t perfect.

Like Swiss cheese, the barriers have holes. Such “holes” could be due to organizational influences, unsafe or inadequate supervision, various preconditions for unsafe (or unreliable) acts, as well as the unsafe or unreliable acts themselves. If the holes in the slices of cheese align just right, an accident is the result (Fig. 2).

Fig. 2. Reason’s “Swiss Cheese Model” elegantly analogizes how adverse events occur.

Our job in managing the human factors of failure is three-fold. First, where appropriate and justified by risk, we need more slices of cheese, i.e., more barriers. Second, we want to minimize the number of holes in each slice. Third, we want to minimize the size of each remaining hole.
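To make the three levers concrete, here is a minimal sketch that treats each slice as a barrier with a hypothetical chance of letting an error slip through its holes. It assumes the slices act independently and that holes within a slice don’t overlap. Both are simplifications (Reason’s point is precisely that holes can line up), and every number below is invented for illustration, not taken from the survey. Under those assumptions, the probability that an error passes every slice is the product of the per-slice pass-through probabilities.

```python
from math import prod

def pass_through(slices):
    """Probability that an error gets through every barrier.

    Each slice is (holes, hole_size): the number of holes and the fraction
    of errors each hole lets through. Assumes non-overlapping holes and
    independent slices, a deliberate simplification of Reason's model.
    """
    return prod(min(1.0, holes * size) for holes, size in slices)

# Hypothetical baseline: three slices, each with 4 holes passing 5% apiece.
baseline = [(4, 0.05)] * 3
more_slices = baseline + [(4, 0.05)]   # lever 1: add another barrier
fewer_holes = [(2, 0.05)] * 3          # lever 2: fewer holes per slice
smaller_holes = [(4, 0.025)] * 3       # lever 3: smaller remaining holes

for name, model in [("baseline", baseline), ("more slices", more_slices),
                    ("fewer holes", fewer_holes), ("smaller holes", smaller_holes)]:
    print(f"{name:>13}: {pass_through(model):.4f}")
```

With these made-up inputs, the baseline lets 0.8% of errors through, and each lever lowers that figure (to 0.16%, 0.10%, and 0.10%, respectively). The exact values mean nothing; the direction of the effect is the point.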

The Policy Slice

For most organizations, the barriers, i.e., slices of Swiss cheese, start with a policy. Typically signed by the ranking executive, often the CEO, and displayed at the organization’s places of business, a policy represents a public commitment to an objective.

When I enter a plant or company headquarters, I typically see framed policies for safety, environmental performance, and quality/customer satisfaction. Rarely do I see a policy for equipment asset management. I find this perplexing.

Equipment-asset-dependent companies rely on physical assets to achieve profits, increased shareholder value, safety performance, environmental-sustainability performance, quality and customer satisfaction, and any other corporate dashboard goals. Why wouldn’t such an organization have a policy specifying how its assets will be designed and acquired, operated, maintained, and eventually disposed of or reused? Publicly committing to asset management in the form of a policy is a great step toward preventing human error.

The Education & Training Slice

Education and training represent the next major slice of Swiss cheese on which to focus. Regrettably, most organizations educate and train their people only to comply with regulatory requirements or to conform to social norms. Evidence of this lies in the fact that when a firm suffers an economic downturn, training is typically the first thing cut from the budget. What a pity.

Esteban-Lloret et al. (2018) found a positive and significant relationship between investment in employee education and training and organizational performance. This stands to reason: people are a major slice of cheese in Reason’s Swiss Cheese Model, and training them well can significantly reduce the number and size of the holes.

The Technology Slice

Technology can provide an engineered slice of cheese to reduce the risk of human error. Sometimes, the engineered solution comes in the form of automation, which can reduce or, in some cases, eliminate human error, assuming the automation technology is properly engineered, installed, and configured.

In other cases, the technology helps the human by error-proofing a system. This is called Poka-yoke in the Toyota Production System (TPS).

Error-proofing improves the man-machine interface. It can include sensory cues to guide the human worker. For example, a gauge on which the acceptable operating range is shaded green and the unacceptable range is shaded red is a very simple technology that helps prevent human error. Other engineered interventions are much more elaborate.

The Procedures & Checklists Slice

Procedures and checklists represent a very important slice of cheese. As noted previously, lacking or ineffective procedures were cited as the number one cause of failure in my 2010 reader survey.

I generally find plant procedures to be inadequate, often frightfully so. Too often, I encounter vague procedures that lack necessary details on fit, tolerance, quantity, and quality. Consider, for example, inspection PMs that say “Check pressure” or “Check temperature,” when they should say “Verify that temperature/pressure is above/below/between X and/or Y.” It’s unrealistic to expect workers to remember all the details necessary to ensure the reliable operation and precision maintenance of plant equipment.
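The gap between “Check pressure” and a verifiable instruction can be expressed as data: a step that names the parameter, its units, and its acceptable limits leaves nothing to memory. The sketch below is purely hypothetical. The `InspectionStep` class, its field names, the pump, and the 40 to 60 psi limits are invented for illustration and don’t come from any particular CMMS or PM template.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass(frozen=True)
class InspectionStep:
    """Hypothetical PM step that states exactly what 'acceptable' means."""
    parameter: str
    units: str
    low: Optional[float] = None   # None means no lower limit
    high: Optional[float] = None  # None means no upper limit

    def instruction(self) -> str:
        """Render the step as an explicit, verifiable instruction."""
        if self.low is not None and self.high is not None:
            return f"Verify that {self.parameter} is between {self.low} and {self.high} {self.units}."
        if self.low is not None:
            return f"Verify that {self.parameter} is at least {self.low} {self.units}."
        if self.high is not None:
            return f"Verify that {self.parameter} is no more than {self.high} {self.units}."
        # A step with no limits is just the vague "Check ..." again.
        raise ValueError("inspection step has no acceptance limits")

    def passes(self, reading: float) -> bool:
        """True if the reading falls within the stated limits (inclusive)."""
        if self.low is not None and reading < self.low:
            return False
        if self.high is not None and reading > self.high:
            return False
        return True

# Invented limits for illustration; real values come from the OEM or engineering.
step = InspectionStep("pump discharge pressure", "psi", low=40.0, high=60.0)
print(step.instruction())   # Verify that pump discharge pressure is between 40.0 and 60.0 psi.
print(step.passes(52.5))    # True
print(step.passes(65.0))    # False
```

Refusing to render a step without limits is one way to keep “Check temperature” from creeping back into the PM library.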

In his book The Checklist Manifesto (2010), Dr. Atul Gawande described his work with the World Health Organization (WHO) on a study at eight hospitals around the world. Researchers evaluated about 4,000 surgeries before, and about 4,000 after, implementation of a 19-item checklist.

According to the WHO study, after the checklist was implemented, the death rate among surgical patients dropped from 1.5% to 0.8% (nearly half of what it had been before the checklist was introduced), and the rate of major complications fell from 11.0% to 7.0%.
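The “nearly half” characterization is straightforward arithmetic on the two rates of 1.5% and 0.8%:

```latex
\text{relative reduction} = \frac{1.5\% - 0.8\%}{1.5\%} = \frac{0.7}{1.5} \approx 0.47
```

In other words, the rate fell by roughly 47%, leaving it at about 53% of its pre-checklist level.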

There’s a reason why checklists rule operations and maintenance activities in reliability-critical industries such as aviation and nuclear power. They can significantly enhance the human performance of your team too.

The Supervision Slice

Another major slice of cheese in the human-factors-management model is supervision—“active” supervision, that is. In many plants, supervisors do just about everything but supervise.

Supervision is about setting priorities, allocating resources, coaching, mentoring, and performing work-quality checks. It’s not about running for parts, hunting for tools, or performing the work itself. Moreover, supervision occurs on the plant floor, not through email. Often, supervision is the last slice of cheese preventing the human errors that compromise asset performance, safety, environmental performance, and quality.

YOU WANT RELIABILITY? STOP REWARDING FAILURE.

Motivation is another major component of human-performance management. It’s usually driven by extrinsic and/or intrinsic rewards.

Extrinsic rewards revolve around money, benefits, and other tangible rewards. Intrinsic rewards relate to recognition, a sense of accomplishment, being a part of a team, contributing to society, etc. Both motivate people, but in different ways.

Regrettably, many organizations that want improved asset performance are extrinsically and intrinsically rewarding failure. Many employees, particularly maintenance personnel, receive a sizable percentage of their pay from overtime. Asking them to surrender this pay in the interest of asset reliability without some form of replacement is nonsensical.

We reward failure intrinsically, too. For example, after a failure occurs and the tradespeople who are on call make their way to the plant (on overtime pay), repair the equipment, and get the operation back up and running, the plant manager, operations manager, and/or maintenance manager typically seek them out to thank them (intrinsic reward).

I’m certainly not suggesting that the workers referenced in the above example shouldn’t be recognized for their efforts. They should. Rather, I’m wondering who recognizes the lube tech for executing an excellent greasing route? Where are the thanks for the operator who executes a great inspection route and enters a well-written proactive work notification? We need to reward those types of behaviors.

What motivates people to work? In his seminal work, A Theory of Human Motivation, Abraham Maslow (1943) identified a hierarchy of human needs that must be satisfied in succession.

The most basic needs are physiological—the need for food, water, clothing, and shelter. Next come safety needs—the need to protect oneself. Once fed, sheltered, and safe, people seek love and belongingness.

Once loved, people pursue needs associated with esteem and ego. The highest order of psychological development is self-actualization. An easy way to distinguish esteem needs from self-actualization needs is the manner in which one contributes to a charity. If someone contributes with the proviso that a building is named after him or her, an esteem/ego need is being met. If one contributes with complete anonymity, self-actualization is the likely driver.

Frederick Herzberg expanded upon Maslow’s work in his book The Motivation to Work (1959). In his “Two-Factor Theory,” Herzberg reduced Maslow’s hierarchy to extrinsic drivers, which he called “dissatisfiers,” and intrinsic drivers, which he called “satisfiers.” In essence, the absence of money, benefits, and other extrinsic rewards will create job dissatisfaction, but their presence alone can’t produce true job satisfaction.

Herzberg suggested that once employees’ extrinsic needs are met, they must receive the intrinsic rewards of recognition and belongingness to achieve true job satisfaction. A few years later, J. Stacy Adams, in his article Inequity in Social Exchange (1965), proposed equity theory, which holds that workers judge the fairness of their extrinsic and intrinsic rewards by comparing them with those of others in the workplace.

These assessments, however, aren’t necessarily accurate, or even close. Some employees may wildly overvalue their contributions and associated rewards, while others may undervalue theirs.

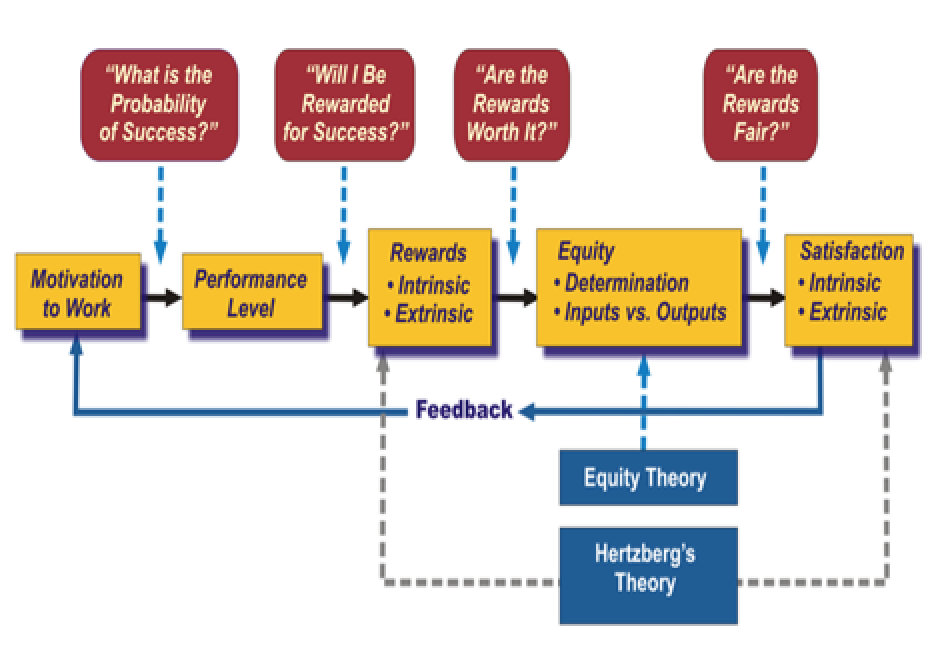

Figure 3 combines the Herzberg and Adams theories into a single model. Employees ask themselves a series of questions, which ultimately determine the effort they put forth.

First, they ask if they can succeed in the job. If they can’t succeed, they won’t be motivated, no matter how big the carrot is. Next, they ask if they will be rewarded. They’ll consider extrinsic rewards like money and intrinsic rewards such as recognition. Finally, they ask if the rewards being offered are of value to them.

If the answer to all three of the above questions is yes, the employees will perform an equity determination to ascertain if they’re getting their fair share of the rewards. If that answer is yes, you have a motivated employee. If not, the individual will either ask for greater rewards or reduce his or her effort to a level that he or she deems appropriate.

Fig. 3. A combined model of Herzberg’s Two-Factor Theory and Adams’ Equity Theory.
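The question sequence in Fig. 3 is essentially a decision procedure, so it can be sketched in a few lines. In this minimal, hypothetical sketch, the `motivation` function and `Outcome` names are invented. Treating a “no” to any of the first three questions as an unmotivated employee is an assumption: the model states it explicitly only for the first question. The fairness input is the employee’s own equity judgment, which, as noted above, may or may not be accurate.

```python
from enum import Enum

class Outcome(Enum):
    UNMOTIVATED = "not motivated, regardless of the reward"
    MOTIVATED = "motivated"
    SEEKS_ADJUSTMENT = "asks for greater rewards or reduces effort"

def motivation(can_succeed: bool, will_be_rewarded: bool,
               reward_is_valued: bool, reward_seems_fair: bool) -> Outcome:
    """Walk the Fig. 3 questions in order (hypothetical sketch)."""
    # Gate questions: can I succeed? will I be rewarded? do I value the reward?
    # Assumption: a "no" to any of them leaves the employee unmotivated.
    if not (can_succeed and will_be_rewarded and reward_is_valued):
        return Outcome.UNMOTIVATED
    # Equity test (Adams): the employee's own, possibly inaccurate, judgment.
    if reward_seems_fair:
        return Outcome.MOTIVATED
    return Outcome.SEEKS_ADJUSTMENT

print(motivation(True, True, True, True).value)    # motivated
print(motivation(True, True, True, False).value)   # asks for greater rewards or reduces effort
print(motivation(False, True, True, True).value)   # not motivated, regardless of the reward
```

Ordering the checks this way mirrors the point that no carrot, however big, helps an employee who doesn’t believe he or she can succeed.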

BRINGING IT ALL TOGETHER

The fact is that 75% to 80% of the failures in your plant are due to human factors, mostly organizational artifacts. If you wish to improve the reliability of your equipment assets and, thus, your profit, safety, environmental, and quality performance, you must tackle human performance. That means taking a long, hard look at your policies, education and training, technological/engineered solutions, procedures, checklists, and supervision to determine whether they are adequate to prevent human error. If they’re not, add slices of cheese, reduce the number of holes in each slice, and shrink any hole that you can’t completely eliminate.

A good plan is to conduct day-in-the-life-of (DILO) studies to determine what causes confusion and a lack of clarity for operators, maintainers, and supervisors, i.e., your front-line team. Then, trace back to the policies, systems, processes, and procedures that represent the root causes. The mistakes occur on the front line, but in 70% of cases, they’re caused by some latent precursor weakness. Get your data from the DILOs, and then fix the problems upstream.

I can’t overemphasize the importance of addressing your rewards systems. All humans are driven by extrinsic and intrinsic rewards. We’ve all heard the saying “what gets rewarded gets done.” That’s only part of the story: what gets measured is typically what gets rewarded.

Be careful about what you measure, and be certain that your rewards are driving the types of behavior that will reduce human error and drive the reliability of your production assets.

REFERENCES

Adams, J. (1965). Inequity in Social Exchange. Advances in Experimental Social Psychology, 2, 267.

DOE STD 1004 (1992). Root Cause Analysis Guidance Document. U.S. Department of Energy, Washington.

DOE HDBK 1028 (2009). Human Performance Improvement, Volumes I and II. U.S. Department of Energy, Washington.

Esteban-Lloret, N., Aragón-Sánchez, & Carrasco-Hernández. (2018). Determinants of employee training: Impact on organizational legitimacy and organizational performance. The International Journal of Human Resource Management, 29(6), 1208-1229.

Gawande, A. (2010). The Checklist Manifesto: How to Get Things Right (1st ed.). New York: Metropolitan Books.

Herzberg, F. (1959). The Motivation to Work (2d ed., Human Relations Collection). New York: Wiley.

Maslow, A. (1943). A Theory of Human Motivation. Psychological Review, 50, 370.

Reason, J. (1990). Human Error. Cambridge, UK: Cambridge University Press.

Troyer, D. (2010). Human Factors Engineering: The Next Frontier in Reliability. Machinery Lubrication magazine. March 2010.

ABOUT THE AUTHOR

Drew Troyer has 30 years of experience in the RAM arena. Currently a Principal with T.A. Cook Consultants, he was a Co-founder and former CEO of Noria Corporation. A trusted advisor to a global blue-chip client base, this industry veteran has authored or co-authored more than 250 books, chapters, course books, articles, and technical papers, and is a popular keynote and technical speaker at conferences around the world. Among other things, he also serves on ASTM E60.13, the subcommittee for Sustainable Manufacturing. Drew is a Certified Reliability Engineer (CRE) and a Certified Maintenance & Reliability Professional (CMRP), holds B.S. and M.B.A. degrees, and is a Master’s degree candidate in Environmental Sustainability at Harvard University. Email dtroyer@theramreview.com.
